Jiarui Jin

ECG-R1: Protocol-Guided and Modality-Agnostic MLLM for Reliable ECG Interpretation

Feb 04, 2026

Abstract: Electrocardiography (ECG) serves as an indispensable diagnostic tool in clinical practice, yet existing multimodal large language models (MLLMs) remain unreliable for ECG interpretation, often producing plausible but clinically incorrect analyses. To address this, we propose ECG-R1, the first reasoning MLLM designed for reliable ECG interpretation via three innovations. First, we construct the interpretation corpus using Protocol-Guided Instruction Data Generation, grounding interpretation in measurable ECG features and monograph-defined quantitative thresholds and diagnostic logic. Second, we present a modality-decoupled architecture with Interleaved Modality Dropout to improve robustness and cross-modal consistency when either the ECG signal or ECG image is missing. Third, we present Reinforcement Learning with ECG Diagnostic Evidence Rewards to strengthen evidence-grounded ECG interpretation. Additionally, we systematically evaluate the ECG interpretation capabilities of proprietary, open-source, and medical MLLMs, and provide the first quantitative evidence that severe hallucinations are widespread, suggesting that the public should not directly trust these outputs without independent verification. Code and data are publicly available at https://github.com/PKUDigitalHealth/ECG-R1, and an online platform can be accessed at http://ai.heartvoice.com.cn/ECG-R1/.
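The abstract does not say how Interleaved Modality Dropout is scheduled, so the following is only a generic per-sample modality dropout sketch, not the ECG-R1 implementation. It assumes a hypothetical helper `modality_dropout` and a hypothetical drop probability `p_drop`. During training, at most one of the two inputs (signal or image) is hidden, so the model always sees at least one modality.

```python
import random
from typing import Optional, Tuple, TypeVar

S = TypeVar("S")  # type of the ECG signal input (hypothetical)
I = TypeVar("I")  # type of the ECG image input (hypothetical)


def modality_dropout(
    signal: S, image: I, p_drop: float, rng: random.Random
) -> Tuple[Optional[S], Optional[I]]:
    """With probability p_drop, hide exactly one modality; never hide both.

    Generic illustration only: the scheduling used by ECG-R1 is not
    described in the abstract and is an assumption here.
    """
    if not 0.0 <= p_drop <= 1.0:
        raise ValueError("p_drop must be in [0, 1]")
    if rng.random() >= p_drop:
        return signal, image      # keep both modalities
    if rng.random() < 0.5:
        return None, image        # drop the signal, keep the image
    return signal, None           # drop the image, keep the signal


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(5):
        print(modality_dropout("signal", "image", p_drop=0.5, rng=rng))
```

Choosing which modality to hide uniformly at random is one simple design choice; a real training loop might weight the two cases differently.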

ECGFlowCMR: Pretraining with ECG-Generated Cine CMR Improves Cardiac Disease Classification and Phenotype Prediction

Jan 28, 2026

Abstract: Cardiac Magnetic Resonance (CMR) imaging provides a comprehensive assessment of cardiac structure and function but remains constrained by high acquisition costs and reliance on expert annotations, limiting the availability of large-scale labeled datasets. In contrast, electrocardiograms (ECGs) are inexpensive, widely accessible, and offer a promising modality for conditioning the generative synthesis of cine CMR. To this end, we propose ECGFlowCMR, a novel ECG-to-CMR generative framework that integrates a Phase-Aware Masked Autoencoder (PA-MAE) and an Anatomy-Motion Disentangled Flow (AMDF) to address two fundamental challenges: (1) the cross-modal temporal mismatch between multi-beat ECG recordings and single-cycle CMR sequences, and (2) the anatomical observability gap due to the limited structural information inherent in ECGs. Extensive experiments on the UK Biobank and a proprietary clinical dataset demonstrate that ECGFlowCMR can generate realistic cine CMR sequences from ECG inputs, enabling scalable pretraining and improving performance on downstream cardiac disease classification and phenotype prediction tasks.

Agent2World: Learning to Generate Symbolic World Models via Adaptive Multi-Agent Feedback

Dec 26, 2025

Abstract: Symbolic world models (e.g., PDDL domains or executable simulators) are central to model-based planning, but training LLMs to generate such world models is limited by the lack of large-scale verifiable supervision. Current approaches rely primarily on static validation methods that fail to catch behavior-level errors arising from interactive execution. In this paper, we propose Agent2World, a tool-augmented multi-agent framework that achieves strong inference-time world-model generation and also serves as a data engine for supervised fine-tuning, by grounding generation in multi-agent feedback. Agent2World follows a three-stage pipeline: (i) a Deep Researcher agent performs knowledge synthesis by web searching to address specification gaps; (ii) a Model Developer agent implements executable world models; and (iii) a specialized Testing Team conducts adaptive unit testing and simulation-based validation. Agent2World demonstrates superior inference-time performance across three benchmarks spanning both Planning Domain Definition Language (PDDL) and executable code representations, achieving consistent state-of-the-art results. Beyond inference, the Testing Team serves as an interactive environment for the Model Developer, providing behavior-aware adaptive feedback that yields multi-turn training trajectories. The model fine-tuned on these trajectories substantially improves world-model generation, yielding an average relative gain of 30.95% over the same model before training. Project page: https://agent2world.github.io.

Fints: Efficient Inference-Time Personalization for LLMs with Fine-Grained Instance-Tailored Steering

Oct 31, 2025

Abstract: The rapid evolution of large language models (LLMs) has intensified the demand for effective personalization techniques that can adapt model behavior to individual user preferences. Beyond non-parametric methods that exploit the in-context learning ability of LLMs, parametric adaptation methods, including personalized parameter-efficient fine-tuning and reward modeling, have recently emerged. However, these methods face limitations in handling dynamic user patterns and high data sparsity scenarios, due to low adaptability and data efficiency. To address these challenges, we propose a fine-grained and instance-tailored steering framework that dynamically generates sample-level interference vectors from user data and injects them into the model's forward pass for personalized adaptation. Our approach introduces two key technical innovations: a fine-grained steering component that captures nuanced signals by hooking activations from attention and MLP layers, and an input-aware aggregation module that synthesizes these signals into contextually relevant enhancements. The method demonstrates high flexibility and data efficiency, excelling in scenarios with fast-changing distributions and high data sparsity. In addition, the proposed method is orthogonal to existing methods and operates as a plug-in component compatible with different personalization techniques. Extensive experiments across diverse scenarios, including short-to-long text generation and web function calling, validate the effectiveness and compatibility of our approach. Results show that our method significantly enhances personalization performance in fast-shifting environments while maintaining robustness across varying interaction modes and context lengths. Implementation is available at https://github.com/KounianhuaDu/Fints.

DeepAgent: A General Reasoning Agent with Scalable Toolsets

Oct 24, 2025

Abstract: Large reasoning models have demonstrated strong problem-solving abilities, yet real-world tasks often require external tools and long-horizon interactions. Existing agent frameworks typically follow predefined workflows, which limit autonomous and global task completion. In this paper, we introduce DeepAgent, an end-to-end deep reasoning agent that performs autonomous thinking, tool discovery, and action execution within a single, coherent reasoning process. To address the challenges of long-horizon interactions, particularly the context length explosion from multiple tool calls and the accumulation of interaction history, we introduce an autonomous memory folding mechanism that compresses past interactions into structured episodic, working, and tool memories, reducing error accumulation while preserving critical information. To teach general-purpose tool use efficiently and stably, we develop an end-to-end reinforcement learning strategy, namely ToolPO, that leverages LLM-simulated APIs and applies tool-call advantage attribution to assign fine-grained credit to the tool invocation tokens. Extensive experiments on eight benchmarks, including general tool-use tasks (ToolBench, API-Bank, TMDB, Spotify, ToolHop) and downstream applications (ALFWorld, WebShop, GAIA, HLE), demonstrate that DeepAgent consistently outperforms baselines across both labeled-tool and open-set tool retrieval scenarios. This work takes a step toward more general and capable agents for real-world applications. The code and demo are available at https://github.com/RUC-NLPIR/DeepAgent.

A Metric for MLLM Alignment in Large-scale Recommendation

Aug 07, 2025

Abstract: Multimodal recommendation has emerged as a critical technique in modern recommender systems, leveraging content representations from advanced multimodal large language models (MLLMs). To ensure these representations are well-adapted, alignment with the recommender system is essential. However, evaluating the alignment of MLLMs for recommendation presents significant challenges due to three key issues: (1) static benchmarks are inaccurate because of the dynamism in real-world applications, (2) evaluations with online systems, while accurate, are prohibitively expensive at scale, and (3) conventional metrics fail to provide actionable insights when learned representations underperform. To address these challenges, we propose the Leakage Impact Score (LIS), a novel metric for multimodal recommendation. Rather than directly assessing MLLMs, LIS efficiently measures the upper bound of preference data. We also share practical insights on deploying MLLMs with LIS in real-world scenarios. Online A/B tests on both Content Feed and Display Ads of Xiaohongshu's Explore Feed production demonstrate the effectiveness of our proposed method, showing significant improvements in user time spent and advertiser value.

Large Language Models are Demonstration Pre-Selectors for Themselves

Jun 06, 2025

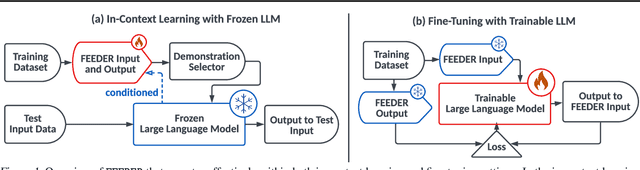

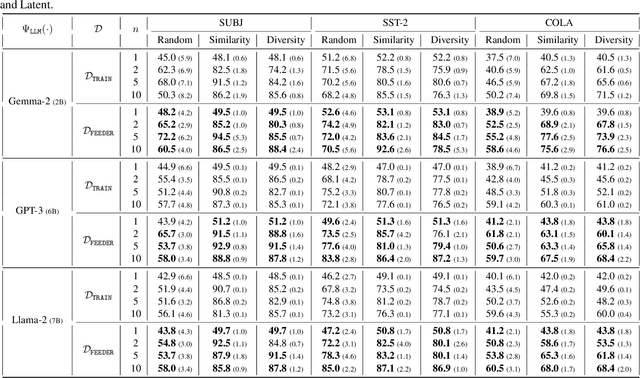

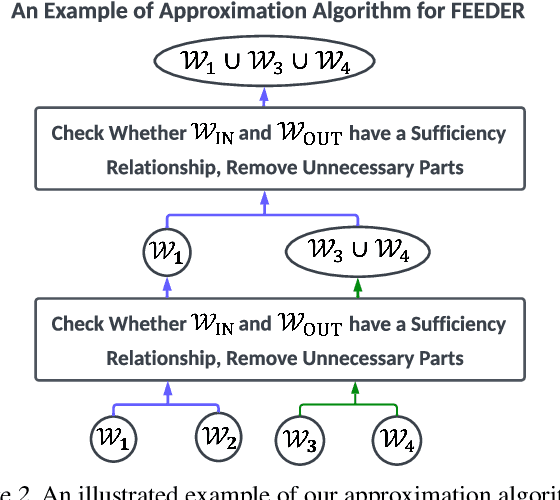

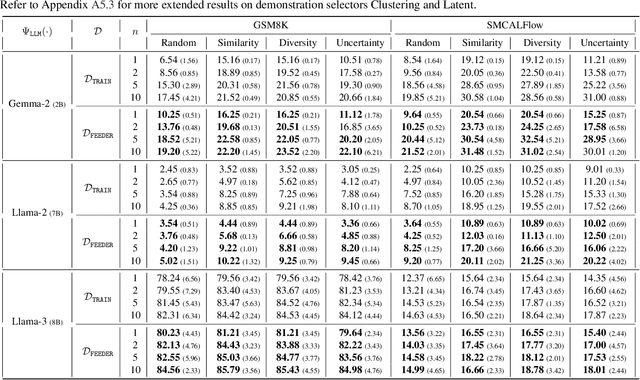

Abstract: In-context learning (ICL) with large language models (LLMs) delivers strong few-shot performance by choosing few-shot demonstrations from the entire training data. However, existing ICL methods, which rely on similarity or diversity scores to choose demonstrations, incur high computational costs due to repeated retrieval from large-scale datasets for each query. To this end, we propose FEEDER (FEw yet Essential Demonstration prE-selectoR), a novel pre-selection framework that identifies a representative subset of demonstrations containing the most representative examples in the training data, tailored to specific LLMs. To construct this subset, we introduce the "sufficiency" and "necessity" metrics in the pre-selection stage and design a tree-based algorithm to identify representative examples efficiently. Once pre-selected, this representative subset can effectively replace the full training data, improving efficiency while maintaining comparable performance in ICL. Additionally, our pre-selected subset benefits LLM fine-tuning, where we introduce a bi-level optimization method that enhances training efficiency without sacrificing performance. Experiments with LLMs ranging from 300M to 8B parameters show that FEEDER can reduce training data size by over 20% while maintaining performance and seamlessly integrating with various downstream demonstration selection strategies in ICL.

Why Not Together? A Multiple-Round Recommender System for Queries and Items

Dec 14, 2024

Abstract: A fundamental technique of recommender systems involves modeling user preferences, where queries and items are widely used as symbolic representations of user interests. Queries delineate user needs at an abstract level, providing a high-level description, whereas items operate on a more specific and concrete level, representing the granular facets of user preference. While practical, both query and item recommendations encounter the challenge of sparse user feedback. To this end, we propose a novel approach named Multiple-round Auto Guess-and-Update System (MAGUS) that capitalizes on the synergies between both types, allowing us to leverage both query and item information to form user interests. This integrated system introduces a recursive framework that can be applied to any recommendation method to exploit queries and items in historical interactions and to provide recommendations for both queries and items in each interaction round. Empirical results from testing 12 different recommendation methods demonstrate that integrating queries into item recommendations via MAGUS significantly enhances the efficiency with which users can identify their preferred items during multiple-round interactions.

Learning ID-free Item Representation with Token Crossing for Multimodal Recommendation

Oct 25, 2024

Abstract: Current multimodal recommendation models have extensively explored the effective utilization of multimodal information; however, their reliance on ID embeddings remains a performance bottleneck. Even with the assistance of multimodal information, optimizing ID embeddings remains challenging for ID-based multimodal recommenders when interaction data is sparse. Furthermore, the unique nature of item-specific ID embeddings hinders information exchange among related items, and the space required by ID embeddings grows with the number of items. Based on these limitations, we propose an ID-free MultimOdal TOken Representation scheme named MOTOR that represents each item using learnable multimodal tokens and connects them through shared tokens. Specifically, we first employ product quantization to discretize each item's multimodal features (e.g., images, text) into discrete token IDs. We then interpret the token embeddings corresponding to these token IDs as implicit item features, introducing a new Token Cross Network to capture the implicit interaction patterns among these tokens. The resulting representations can replace the original ID embeddings and transform the original ID-based multimodal recommender into an ID-free system, without introducing any additional loss design. MOTOR reduces the overall space requirements of these models and facilitates information interaction among related items, while also significantly enhancing the model's recommendation capability. Extensive experiments on nine mainstream models demonstrate the significant performance improvement achieved by MOTOR, highlighting its effectiveness in enhancing multimodal recommendation systems.
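The product quantization step mentioned in the abstract can be illustrated with a minimal sketch. This is not the MOTOR code: the function `pq_encode`, the toy codebooks, and the feature values are all hypothetical, and a real system would learn its codebooks (for example with k-means) rather than hard-code them. The feature vector is split into equal sub-vectors, and each sub-vector is mapped to the index of its nearest centroid, giving one discrete token ID per subspace.

```python
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def pq_encode(feature: Vector, codebooks: List[List[Vector]]) -> List[int]:
    """Encode a feature vector as one token ID per subspace.

    codebooks[m] holds the centroids of subspace m (assumed already learned).
    """
    num_subspaces = len(codebooks)
    if num_subspaces == 0 or len(feature) % num_subspaces != 0:
        raise ValueError("feature length must be a multiple of the subspace count")
    sub_dim = len(feature) // num_subspaces
    token_ids = []
    for m, codebook in enumerate(codebooks):
        sub_vector = feature[m * sub_dim:(m + 1) * sub_dim]
        # The index of the nearest centroid is the token ID for this subspace.
        nearest = min(
            range(len(codebook)),
            key=lambda k: squared_distance(sub_vector, codebook[k]),
        )
        token_ids.append(nearest)
    return token_ids


# Hypothetical toy codebooks: 2 subspaces, each of dimension 2.
TOY_CODEBOOKS = [
    [(0.0, 0.0), (1.0, 1.0)],
    [(0.0, 1.0), (1.0, 0.0), (0.5, 0.5)],
]

if __name__ == "__main__":
    item_a = [0.9, 0.8, 0.1, 0.9]
    item_b = [0.1, 0.0, 0.1, 0.9]
    print(pq_encode(item_a, TOY_CODEBOOKS))  # [1, 0]
    print(pq_encode(item_b, TOY_CODEBOOKS))  # [0, 0]
```

In this toy run the two items differ in the first subspace but receive the same token in the second, which is the kind of token sharing that could let related items exchange information through a common embedding.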

DRepMRec: A Dual Representation Learning Framework for Multimodal Recommendation

Apr 17, 2024

Abstract: Multimodal Recommendation focuses mainly on how to effectively integrate behavior and multimodal information in the recommendation task. Previous works suffer from two major issues. Firstly, the training process tightly couples the behavior module and multimodal module by jointly optimizing them using shared model parameters, which leads to suboptimal performance since behavior signals and modality signals often provide opposite guidance for the parameter updates. Secondly, previous approaches fail to take into account the significant distribution differences between behavior and modality when they attempt to fuse behavior and modality information. This results in a misalignment between the representations of behavior and modality. To address these challenges, in this paper, we propose a novel Dual Representation learning framework for Multimodal Recommendation called DRepMRec, which introduces separate dual lines for the coupling problem and Behavior-Modal Alignment (BMA) for the misalignment problem. Specifically, DRepMRec leverages two independent lines of representation learning to calculate behavior and modal representations. After obtaining separate behavior and modal representations, we design a Behavior-Modal Alignment Module (BMA) to align and fuse the dual representations to solve the misalignment problem. Furthermore, we integrate the BMA into other recommendation models, resulting in consistent performance improvements. To ensure dual representations maintain their semantic independence during alignment, we introduce Similarity-Supervised Signal (SSS) for representation learning. We conduct extensive experiments on three public datasets and our method achieves state-of-the-art (SOTA) results. The source code will be available upon acceptance.
